This story is part of CES, where our editors bring you the latest news and the hottest gadgets of the virtual CES 2021.

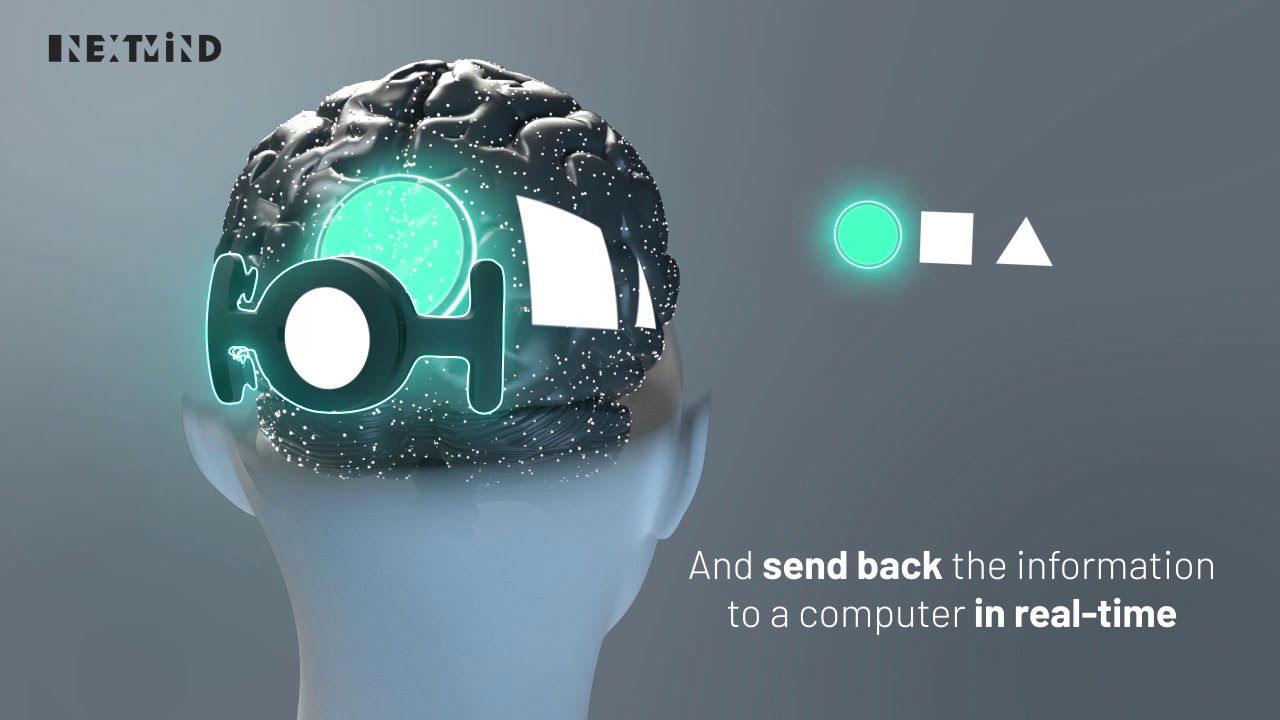

Wearing my Oculus Quest VR headset, I was in a room surrounded by large-brained aliens. Their heads flashed black and white. I turned toward one of them and stared at it. Soon enough, its head exploded. I looked at the others, making their heads blow up too. Then I looked across the room at a flashing portal marker, and I was gone. I did all this without eye tracking. Instead, a band with electrodes on the back of my head was sensing my visual cortex.

I felt like I was living in some kind of virtual version of a David Cronenberg movie (The Fly). But in reality, I was trying out a neural input device made by NextMind.

Before the holiday break, I received a large black box with a small package inside: a black disc attached to a headband. Small rubber-footed pads covered the disc. NextMind's $399 developer kit, announced a year ago at CES 2020, aims at something many companies are striving for: neural input. NextMind wants to read the brain's signals to track attention, control objects in VR and maybe more.

The real potential of neural input technology is still hard to gauge, and the startups in this space are each taking different approaches. CTRL-Labs, a neurotechnology company acquired by Facebook in 2019, developed an armband that could sense hand and finger movements. Another company, Mudra, is making a wristband for the Apple Watch, due later this year, that also senses neural inputs at the wrist.

A year ago, I tried an early version of the Mudra Band and saw how it could interpret my finger movements and even roughly measure how much pressure I applied when I squeezed my fingers. Even more strangely, Mudra's technology can work when you don't move your fingers at all. Possible applications include helping people who don't have hands, for example as part of a wearable prosthesis.

NextMind's ambitions follow a similar assistive-tech path, while also aiming for a future in which neural devices could make physical inputs more accurate or work alongside a range of other peripherals. Facebook's head of AR/VR, Andrew Bosworth, expects neural input technology to emerge at Facebook within three to five years, where it could end up combined with wearable devices such as smart glasses.

My experience with NextMind has been rough, but also mesmerizing. The dev kit comes with its own tutorial and includes demos that run on Windows or Mac, plus a SteamVR demo that I played on the Oculus Quest over a USB-C cable. The compact plastic Bluetooth puck comes on a headband, but with a little effort it can be unclipped and attached directly to the back of a VR headset's strap.

Every NextMind experience involves looking at large, subtly flashing areas on the screen, which you "click" by focusing on them. Or by staring. It was hard to tell how to activate something: I tried opening my eyes wider, breathing, or concentrating harder. Eventually, the thing I was looking at would click. The device really could tell which of five flashing on-screen "buttons" I was looking at. And again, no eye tracking was involved; the sensor just sat on the back of my head.

Did it make me feel uneasy? Unsure? Oh, yeah. And when my son came in and saw me doing this, and I showed him what I was doing, he was amazed, as if I were performing a magic trick.

NextMind's dev kit isn't meant for consumer devices yet. The Mudra Band, although it is launching as an Apple Watch accessory through the crowdfunding site Indiegogo, is also experimental. Still, I know we'll see more technology like this. There was even a "neural mouse" glove at this year's virtual CES that aims to improve response times by sensing click inputs a hair faster than a physical mouse could register them. I haven't tried that glove, but it doesn't sound far from what companies like NextMind imagine, either.

Right now, neural inputs in VR feel like an imperfect attempt at a new kind of input, like algorithms searching for a way to do something I would probably just do with a keyboard, mouse or touchscreen. But that's how voice recognition once felt. And hand tracking. Right now, NextMind's demos really work. I'm trying to imagine what's going to happen next. Whatever it is, I hope it won't involve more exploding heads.